Two years ago, Marco helped me with a dimensional model design problem. He probably does not remember me, but I have been following his work ever since, mainly everything related to dimensional modelling. Last week Marco published the first draft of an alternative proposal to the Inmon and Kimball approaches. I spent yesterday and today reading all 44 pages of this first draft, and it brought back all the issues and problems I have run into in the past… It’s a very good approach, and not only for BI professionals using Microsoft tools.

I’m commenting on Marco Russo and Alberto Ferrari’s draft only because I want to contribute my opinion to their project and help promote it. I would be happy if professionals and friends like Subhash, Bruno, Amin, Tomislav, Alex, Ella and all the other experts gave their opinions on this subject.

It’s an approach close to the real world, and the comments below are only meant to clarify my own understanding and to make the draft even better. As they say in the draft:

“Always remember that these are not fixed and immutable rules. Your specific implementation of the architecture will probably be constrained by other considerations. You – as the BI analyst – are the one and only person that will be able to take these decisions in a correct way.”

Download the draft from the official website.

Some comments/questions

1. Is the main purpose of the configuration database to handle bookkeeping information and data that come from more than one source? One example is the same customer arriving from two different sources under different names… What other functions could this configuration database have? I think the configuration database should be shared by several BI solutions, using SQL schemas to separate them. If you have one configuration database per BI solution, you will have higher costs and more maintenance work.

2. I agree that the mirror database filters only the required data, and that the staging area is a relational database acting as a bridge between the mirror and the denormalized data warehouse database. If you need to reprocess all the cubes, does the data warehouse database store all the historical data, or do you need to go back to the mirror database or to the operational sources? (Sometimes historical data in the operational sources is available only once.)

3. SQL schemas can create problems with third-party products, so I always avoid them. What do you think? In the data warehouse and data mart databases you change the schemas used in the staging area. That may help in understanding the solution, but I’m afraid it could become an issue in the ETL and OLAP designs. What is your opinion?

4. Your definition of the OLTP Metadata DB confuses me… As I understand it, it holds views over the OLTP operational systems that act as a bridge to the DW when no staging area is used. But metadata normally gives context to your data, describing what each field, each table and each database is… I think you could explain this better.

5. Following your draft, in the Kimball approach the data warehouse database has a denormalized structure, whereas in the Inmon approach it has a normalized structure. Am I correct? This point is somewhat confusing…

6. I don’t think the status column should be used in SCD type 2 dimensions. The only use I see for it is for users who query the database directly and need to identify the current row quickly. In practice, if your lookup uses the status column, you may have a problem when you need to import rows from an earlier date (late-arriving facts): those rows will be mapped to the current version of the member, which may not have been the valid one on that date.

7. My definition of an ODS was a little different. Your ODS description (used only by software) may lead to several versions of the “truth”. What do you think?

8. I completely agree with you about natural and surrogate keys in the date dimension. In my last project I used an identity integer as the surrogate key, and it left me lost: I always needed to join the fact table with the date dimension to understand the meaning of each fact.

9. On page 36 you wrote “Mirroring is not part of the ETL.” I think creating the mirror is the extract step of an ETL (Extract, Transform, Load) process… You don’t do any transformations there, only the basics: renaming columns, extracting the data and, where possible, applying some filters. Changing a column data type could generate an error… and we must avoid accessing the source operational systems more than once.

10. On page 42: in which database do you store the logs written by the SSIS log provider (Sysdtslogs)? I liked your answer to the question “What is the difference between log and audit?”, and also the use of audit information directly in the cubes, hidden from business users. Some people are a little confused about this subject.

11. How do BI analysts track data lineage? And how can we perform an impact analysis of a change in a database?
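To make point 6 concrete, here is a small sketch comparing a lookup on the status column with a lookup on the validity date range. It assumes a hypothetical customer dimension that has `valid_from`/`valid_to` columns next to the status flag (called `is_current` here); the names, dates and in-memory list are illustrative only, and in a real solution this lookup would be done in SQL or in the ETL tool.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class CustomerVersion:
    """One row of a hypothetical SCD type 2 customer dimension."""
    surrogate_key: int
    customer_code: str         # natural (business) key from the source system
    city: str                  # the tracked attribute that changed over time
    valid_from: date           # first day this version is valid (inclusive)
    valid_to: Optional[date]   # first day it is no longer valid (exclusive); None = still open
    is_current: bool           # the "status" column discussed in point 6


def lookup_by_status(dim: List[CustomerVersion], code: str) -> Optional[int]:
    """Status-based lookup: always returns the current version's key."""
    for row in dim:
        if row.customer_code == code and row.is_current:
            return row.surrogate_key
    return None


def lookup_by_date(dim: List[CustomerVersion], code: str, fact_date: date) -> Optional[int]:
    """Date-range lookup: returns the key of the version valid on fact_date."""
    for row in dim:
        if (row.customer_code == code
                and row.valid_from <= fact_date
                and (row.valid_to is None or fact_date < row.valid_to)):
            return row.surrogate_key
    return None


customer_dim = [
    CustomerVersion(1, "C042", "Lisbon", date(2006, 1, 1), date(2008, 3, 1), False),
    CustomerVersion(2, "C042", "Porto", date(2008, 3, 1), None, True),
]

# A late-arriving fact dated before the customer moved to Porto.
late_fact_date = date(2007, 11, 15)
print(lookup_by_status(customer_dim, "C042"))                # 2 (Porto version, wrong for this fact)
print(lookup_by_date(customer_dim, "C042", late_fact_date))  # 1 (Lisbon version, valid on that date)
```

The status flag can still be kept for people browsing the table, but the ETL lookup relies only on the date range, so late-arriving facts land on the version that was valid when they happened.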

Improvements

1. I think it could be interesting to include some naming standards for database objects (tables, views and stored procedures). Which naming conventions do you normally use for each object in each database (Mirror, Staging, ODS, DW and Data Mart)?

2. More focus on business and technical metadata of each database.

3. Create an additional shared database to improve SSAS cube performance through usage-based optimization (activating the query log of the OLAP cubes and designing aggregations based on that log).

4. The configuration database described could also make use of data profiling.

5. Include a data mining structure
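Improvement 4 could start as simply as computing a few statistics per column during extraction and storing them in the configuration database. The sketch below assumes rows arrive as Python dictionaries and computes only row, null and distinct counts plus the most frequent value; the function name, the sample data and the idea of persisting the result in the configuration database are my own assumptions, not part of the draft.

```python
from collections import Counter
from typing import Any, Dict, List


def profile_columns(rows: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Compute basic statistics for every column found in a list of row dicts.

    A key missing from a row is counted as NULL. Values must be hashable
    (strings, numbers, dates), which is the usual case for relational data.
    """
    columns = sorted({name for row in rows for name in row})
    profile: Dict[str, Dict[str, Any]] = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        counts = Counter(non_null)
        profile[col] = {
            "rows": len(values),
            "nulls": len(values) - len(non_null),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return profile


# Hypothetical extract of customers, including the same customer under two names.
customers = [
    {"customer_code": "C042", "name": "ACME Lda", "country": "PT"},
    {"customer_code": "C042", "name": "ACME, Lda.", "country": "PT"},
    {"customer_code": "C043", "name": "Beta SA", "country": None},
]

for column, stats in profile_columns(customers).items():
    print(column, stats)
```

Here `customer_code` has 2 distinct values while `name` has 3, which is the kind of signal that points to the duplicate-customer problem from comment 1.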

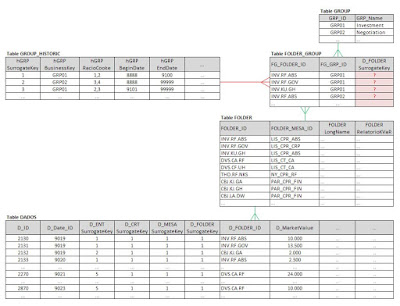

I modified the original image, taken from draft 1.0.

Some of these comments may seem basic to some of you, but because there are no standards (and no ISO standard) for this subject, BI analysts still have doubts about the best practices to follow when implementing a BI solution.

So I hope Marco and Alberto will stop by here to clarify the points above. What they are doing is a very important step, because they are creating rules and standards that make us all speak the same language.

Good Luck!!

Updated 29-09-2008 with Alberto Ferrari’s response. I will reply in the comments section.

Alberto and Marco’s response:

Pedro,

I'll try to give you some answers, even if the space here is not enough for a complete discussion:

1. Yes, the config DB can be shared among different solutions for the same customer, not least because in the DWH world "different solutions for one customer" really means "one complex solution for one customer, composed of different subject areas". :)

2. In some situations we store several snapshots of the OLTP tables in the OLTP Mirror, so that we can rebuild the history. Clearly the DWH contains all the history, but sometimes you will need to rebuild the DWH too...

3. I would not avoid schemas just to satisfy third-party tools. If some tool needs to query the DWH, it will have its own views and, if it cannot use schemas, those views will live in the dbo schema.

4. OLTP Metadata is just a set of views. If you cannot build them in the OLTP DB and you cannot afford an OLTP Mirror, then an OLTP Metadata DB might help.

5. The Kimball approach is denormalized, but the degree of normalization required in an Inmon one is not very clear; it all depends on the analyst. Inmon leaves all these "details" to the analyst, giving just some advice.

6. I agree with you. What else could I say? :)

7. What is your definition of ODS?

8. Yep. Even if, sometimes, I had to change the key, using some very creative ways of expressing a date. If you have several billion rows, the INT (4-byte) representation of YYYYMMDD might be a problem and a SMALLINT (2-byte) solution might be helpful. Clearly 20080101 does not fit in a SMALLINT... so you'll need a different way to express the date.

9. I don't understand your note. During mirroring you should not do any kind of transformation or column renaming. If a column is called C1P8D3B0 and is of type INT, then you will keep that column name and type in the OLTP mirror, and a VIEW will change the name and type when you extract from the mirror.

10. I think the log DB is the perfect place for those logs.

We are about to publish a second whitepaper that might be interesting: it will demonstrate the methodology on the very (ab)used AdventureWorks DB.

Thanks a lot for your comments. I will use them, together with your improvements section, for a future revision of the whitepaper.

Alberto
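Regarding Alberto's point 8: he does not say which "creative" encoding he used, so what follows is only one hypothetical option. Store the number of days elapsed since a fixed base date, which fits in a 2-byte SMALLINT (range -32768 to 32767) for about 89 years on either side of that date. The base date, the function names and the Python code are my own assumptions; in SQL Server the same idea would be a `DATEDIFF(day, ...)` from the base date.

```python
from datetime import date, timedelta

SMALLINT_MIN, SMALLINT_MAX = -32768, 32767   # SQL Server SMALLINT range (2 bytes)
BASE_DATE = date(2000, 1, 1)                 # hypothetical base date; pick one centred on your data


def yyyymmdd_key(d: date) -> int:
    """Readable 'smart' key: 2008-01-01 -> 20080101. Needs a 4-byte INT."""
    return d.year * 10000 + d.month * 100 + d.day


def smallint_key(d: date) -> int:
    """Compact key: days elapsed since BASE_DATE (negative before it)."""
    key = (d - BASE_DATE).days
    if not SMALLINT_MIN <= key <= SMALLINT_MAX:
        raise ValueError(f"{d} is out of SMALLINT range relative to {BASE_DATE}")
    return key


def date_from_smallint_key(key: int) -> date:
    """Decode a compact key back into a calendar date."""
    return BASE_DATE + timedelta(days=key)


d = date(2008, 1, 1)
print(yyyymmdd_key(d))                          # 20080101, far above SMALLINT_MAX
print(smallint_key(d))                          # 2922
print(date_from_smallint_key(smallint_key(d)))  # 2008-01-01
```

The trade-off is readability: 2922 means nothing to a human, which is the same problem I described in my point 8 with identity keys. It only pays off when the fact table is large enough that saving 2 bytes per row (several gigabytes per date key column at several billion rows) really matters.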